(SEATTLE) — The pipeline from the initial conception of a scientific study, to the headlines that introduce the study’s results to the public, is like a steep-peaked mountain with peer-reviewed journals balancing precariously atop the summit.

At the base of the west slope sits a scientist with an idea. They ask a question, form a hypothesis, and begin an experiment. They’re on the hunt for a pattern, a clue, a breakthrough, hoping to be remembered for their contribution to science, just as we remember Newton and Einstein. But scientific research requires funding, so their idea must be worthy of investment.

Funding comes mainly from corporations and government agencies, where panels of scientists review grant requests, but these grants are extremely competitive and hard to get. Funding is crucial for a scientist’s career, not only because it makes the research possible, but also because a large portion of the awarded funds goes directly to the scientist’s university or institution. Additionally, success or failure in receiving a grant could significantly affect the security of their faculty position, raising the stakes even more.

During this slow and difficult climb up the mountain, trekking past the pressures of approval and grants, scientists maintain their focus on the ultimate prize at the peak: publication in a peer-reviewed journal. A lot rides on a scientist’s ability to reach this end goal. Being a published researcher earns you merit and respect among your peers and helps secure future research, since you’re more likely to receive funding if you’ve already got some publications under your belt.

It really is, as they say, publish or perish.

Once a study makes its way through the peer-review system and into an academic journal, it’s a rapid descent down the other side and into the public eye, generally in one of two ways. Journalists source either directly from the peer-reviewed journal or from an institution or university press release that showcases newly published research, highlighting studies with particularly interesting or newsworthy results, according to Ferris Jabr, freelance science writer and contributor to the New York Times Magazine.

As the crux of the science dissemination pipeline, peer-review has significant influence over the way journalists report on studies and results. Unfortunately, the pressure that dangles over researchers’ heads to fill their resumes with as many publications as they can has caused the whole system to essentially break down. It’s therefore up to journalists to be aware of the imperfections throughout the peer-review system, and to respond by being critical of published studies and reporting with ethical intent.

“Publish or perish means there are too many papers,” says Ivan Oransky, editor of the blog Retraction Watch, “and the system can’t tolerate it.” Oransky and his colleagues run the most comprehensive database of papers that have been retracted from peer-reviewed journals, giving him direct insight into how frequently flawed science makes its way into reputable journals and consequently into the media.

To Oransky, who has been documenting retractions for over a decade, the flaws in peer-review are in no way new. But the explosion of new research devoted to COVID-19, along with the unprecedentedly quick turnaround in publication of COVID studies, resulted in 400,000 papers being published in a 20-month span, according to Oransky. This unusually fast burst of publications brought to light the serious issues within the peer-review system. “The pandemic made everything more visible,” says Oransky. “All of the issues that Retraction Watch has been looking at since 2010, they’re public now.”

There are about 3 million papers published in peer-reviewed journals every year, according to Oransky, and that number has been steadily increasing for decades. He attributes this both to an increase in the number of journals and to the fact that journals tend to publish more content.

More content is great. But how much of that content turns out to be unreliable?

“The number was 2700 in 2020, but we have 3000 retractions a year now,” says Oransky. The steady increase in retractions from year to year is a pattern that can be observed back to the beginning of Retraction Watch, and there’s no indication of it slowing down. Currently, their database contains 31,000 retractions. As a percentage of total papers published a year, that’s minuscule. But that’s not the “right” number of retractions, he says. “There should be far more, and anyone who thinks that’s controversial hasn’t been paying attention.”

Oransky estimates that the true number of papers that should be retracted is 10-fold what we’re aware of.
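
To put those figures in proportion, here is a rough back-of-the-envelope sketch in Python. It uses only the numbers Oransky cites (about 3 million papers and 3,000 retractions a year, plus his tenfold estimate). The variable names are illustrative, and applying the tenfold estimate to a single year's figures is an assumption made purely for the sake of the arithmetic.

```python
# Figures as quoted by Oransky in this article (approximate).
papers_per_year = 3_000_000
retractions_per_year = 3_000

# Assumption: his "10-fold" estimate is applied to the annual count.
underreporting_factor = 10

observed_rate = retractions_per_year / papers_per_year
estimated_rate = observed_rate * underreporting_factor

print(f"Observed retraction rate: {observed_rate:.1%}")     # 0.1%
print(f"Rate if tenfold were retracted: {estimated_rate:.1%}")  # 1.0%
```

In other words, roughly one paper in a thousand is retracted today, and on Oransky's estimate the figure would be closer to one in a hundred.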

The main reason it’s nearly impossible to know the full breadth of unreliable studies in peer-reviewed journals is that there is no universal indexing system between journals. They all have their own way of classifying and documenting retracted papers, and there isn’t a network of communication throughout the system as a whole.

Elisabeth Bik, science integrity consultant and author of the blog Science Integrity Digest, says, “It’s not an initiative run by the scientific publishers, they don’t really want to cooperate. It’s run by volunteers like us.”

We can thank volunteers like Oransky and Bik for documenting and discussing retracted papers, two-thirds of which are due to misconduct. This can include fabrication, plagiarism, p-hacking (manipulating data or analyses until a result reaches the statistical significance threshold of p < 0.05 that is commonly required for publication), or other unethical acts. For example, Bik specializes in identifying cases of photo manipulation and duplication, recently calling into question a study published in Scientific Reports that she suspects of using images with “repetitive elements, suggestive of cloning.” In other words, Photoshop.
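
To see why p-hacking, mentioned above, is so effective at producing publishable results, consider a minimal simulation sketch. It models just one form of the practice: measuring many outcomes when there is no real effect and reporting only the best-looking one. The function names, sample sizes, and the choice of a simple z-test are all assumptions made for illustration; they are not drawn from any study discussed here.

```python
import random
from statistics import NormalDist, mean

def p_value_no_effect(rng, n=30):
    """Two-sided z-test p-value for two groups drawn from the SAME distribution."""
    a = [rng.gauss(0, 1) for _ in range(n)]
    b = [rng.gauss(0, 1) for _ in range(n)]
    # Each observation has variance 1, so the difference of means has variance 2/n.
    z = (mean(a) - mean(b)) / (2 / n) ** 0.5
    return 2 * (1 - NormalDist().cdf(abs(z)))

def false_positive_rate(outcomes_tested, studies=1000, seed=0):
    """Share of no-effect studies that can still report some p < 0.05."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(studies):
        # The "hack": test several outcomes, keep only the smallest p-value.
        best_p = min(p_value_no_effect(rng) for _ in range(outcomes_tested))
        if best_p < 0.05:
            hits += 1
    return hits / studies

honest = false_positive_rate(outcomes_tested=1)
hacked = false_positive_rate(outcomes_tested=20)
print(f"One pre-planned outcome: {honest:.0%} of studies look significant")
print(f"Best of 20 outcomes:     {hacked:.0%} of studies look significant")
```

With a single pre-planned test, about 5% of these no-effect studies should cross the threshold by chance. With 20 independent outcomes to choose from, the expected share rises to roughly 64% (1 − 0.95^20), which is why a lone p < 0.05 says little without knowing how many analyses were tried.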

Yes, sometimes scientists are just sloppy, and careless mistakes slip through. Other times, such as in the case of photoshopping or data duplication, the act is intentional on the part of the author. It’s these cases that concern Bik. “These are the dumb fraudsters,” she says about the authors that she does bust. “But I think there’s much more fraud than we could ever capture. For every dumb fraudster, there’s 10 more that are smart.”

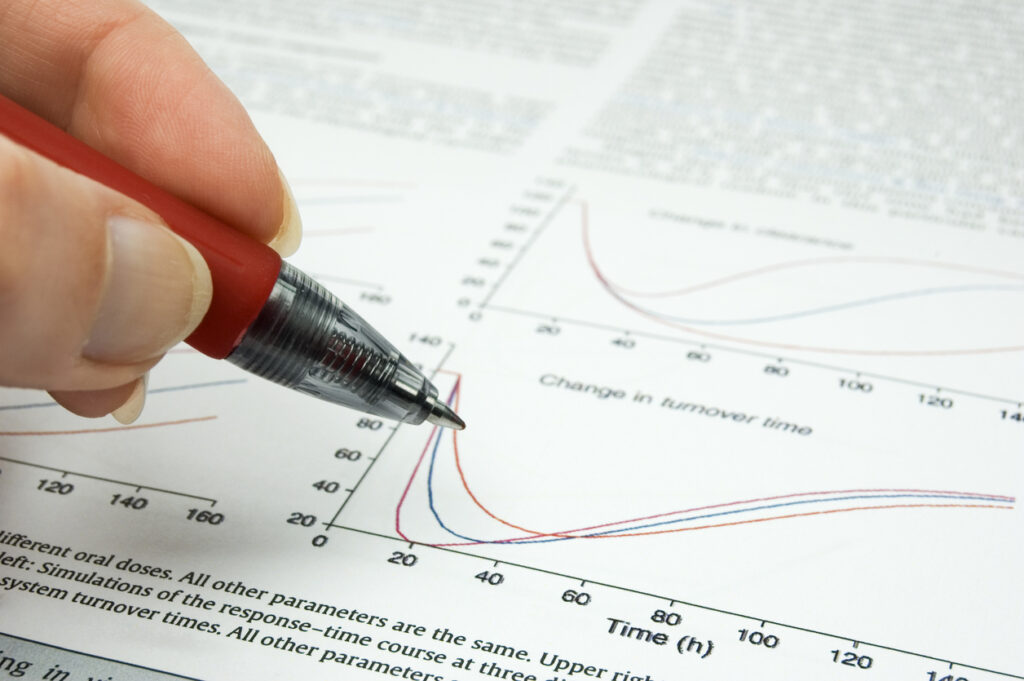

Misconduct can be hard to spot, especially for journalists who typically aren’t trained in science or statistics. As someone who has reported on many studies throughout his career, Jabr stresses the importance of talking to experts. “Reach out to independent experts that were not involved in that study,” he says, “and get their perspective on that research.” He also suggests journalists examine how the authors arrived at their conclusion from the data given. “A lot of the time, it comes down to interpretation. How do they interpret the data that they’ve collected?”

Beyond just a few flaws in a single paper, a major red flag is when an author develops a track record of retractions. Retraction Watch keeps a handy leaderboard of authors with the most retractions; the top three have more than 100 each. You can’t help but wonder how a repeat offender of scientific misconduct is able to continuously slip through and get published.

So the obvious question here is: How do duplicated data or manipulated photos go undetected by the peer-reviewer, the one person tasked with identifying such mistakes?

“One of the many flaws in the peer-review system is that it’s the same smallish group of experts that are being asked to review,” says Bik. And the “usual suspects,” as she names them, “are heavily biased towards North America and Europe, English-speaking, mainly male.” There are well-established scientists outside North America and Europe who are being left out of the peer-review process, she explains, while those who have traditionally peer-reviewed are asked to peer-review more. Note how this mirrors the pattern seen earlier: researchers who are already published are more likely to receive funding in the future.

To illustrate the lack of effort in finding diverse and qualified experts, Oransky shares that in early 2021, he was asked to peer-review five papers on COVID-19. “What is that about?” he says. “I’m not at all qualified to do that.” It turns out that because he had co-authored an article about retracted COVID-19 papers, he appears in databases as a “COVID-19 expert.”

“I’m probably not the only person that’s happening to, and I hope I’m not the only one who says no,” says Oransky.

Adding to the bias in the reviewer selection process, Bik says, is authors’ ability to suggest a reviewer they think would be a good match for their paper. This can be dangerous for the obvious reason of favoritism in the author’s choice of who reviews their paper, but also for the less obvious reason of “peer-review rings,” in which an author creates a second email account and is able to peer-review their own paper.

The fact of the matter is, the pool of reviewers isn’t large enough to handle the swarm of papers, especially when, according to Bik, each review takes four to five hours. With the system backlogged, editors then have a hard time finding a reviewer who’s available. As an editor, Bik says, “In some cases I have to find 20 peer-reviewers to end up, in the end, having two accepting.”

With biased data, photoshopped images, authors reviewing their own papers, and an overall rushed process, journalists face a big obstacle to reporting on peer-reviewed studies accurately and reliably. A strong tool for overcoming this: mindfulness.

“Make sure that you’re understanding the study properly, and take advantage of the fact that there are people out there who have devoted their lives to understanding this work, and they’re available to help,” says Jabr. He also suggests looking at the context in the research literature, saying, “[See] what other studies have to say about the phenomenon you’re writing about.” And lastly, “Take time to research and understand, and come out with something a bit more comprehensive and useful, rather than rushing to be the first one out of the pen.”

It’s also useful for journalists to keep track of studies they have reported on in the past, so they don’t later cite a paper that has since been retracted. Oransky suggests using programs such as Zotero (open-source, free), Papers, and EndNote, where journalists can create a database of papers they’ve reported on and receive an automated alert when any have been retracted.