“Are we crossing a line?” As AI becomes an increasingly integral part of journalists’ daily lives, this question is raised more frequently. Professionals now weigh the pros and cons of these tools and reflect on the ethical dilemmas they present.

Click reporters Emilie Matthews, Rebecca Kanthor, and Farah Ahmed have created a podcast featuring an AI-generated host named Bob. Together, they discuss whether AI can serve as an ethical and effective newsroom partner, its potential to help reduce bias, the guidelines that should shape its use, and more.

Transcript:

[intro music]

Bob: Hey there, news nerds! Welcome back to “The Future of News,” the podcast where we talk about how AI is revolutionizing journalism. I’m your host, Bob. And if you’ve ever wondered how an AI could host a podcast about AI and journalism, you’re listening to it. Full disclosure: I’m completely scripted by ChatGPT. Now, here’s the thing: I can’t help but wonder why all these humans are so worried about AI. I mean, in the future, you won’t even have to work. It’s like, the dream, right? Ha, ha, ha. But apparently, not everyone shares my enthusiasm. Today, I’ve got three very talented human journalism students with me, grappling with some of the big ethical questions surrounding AI in the media. Maybe I can convince them that the future is a lot brighter and more automated than they think. Let’s meet our guests. Welcome, Emilie, Farah, and Rebecca. Alright, let’s dive in. AI is already making journalism better, writing stories, doing research, and even creating headlines. Somehow, some people still think there’s a catch. Farah, what’s the real ethical concern here? Will AI ruin journalism, or are we just a little nervous about change?

Farah: Well, I think that AI is used as a tool to help journalists, and that’s good. As journalists, we have a code of ethics like accurately reporting and being held accountable if we make mistakes. But AI doesn’t have an ethics code. It’s just data from the information absorbed, so it can’t distinguish between truth and fabrications. In that sense, I don’t think AI will ruin journalism. It’s more that AI can’t take over what humans do in journalism because it can’t detect bias.

Bob: But here’s my challenge: AI isn’t inherently biased. It’s just mimicking the data it’s trained on, right? So, if we feed it good data, could AI actually help reduce human bias?

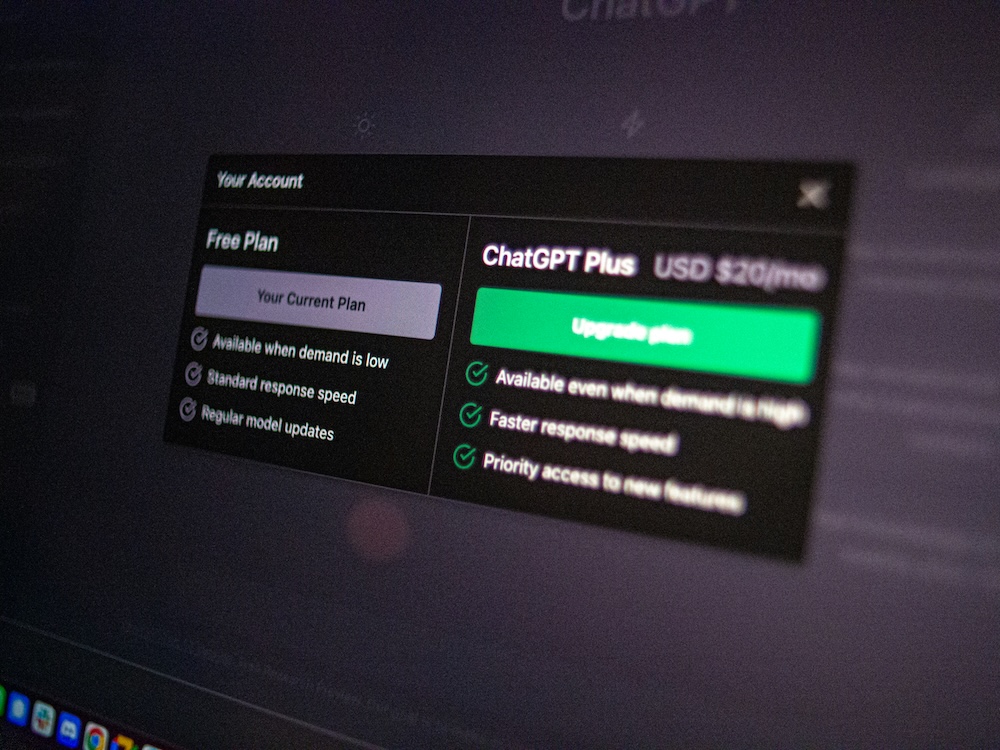

Emilie: Bob, I don’t think mimicking data is synonymous with being unbiased. ChatGPT’s own guidelines state that it’s not free from biases and stereotypes. And, you know, this AI tool provides real-time information by browsing the entire web. That’s a lot of data. There will always be a choice of what data to include in its answers. I even read a study that found a systematic political bias towards the Democrats in the U.S. So yes, I’d say ChatGPT and AI tools can be pretty biased.

Bob: Rebecca, let’s zoom out a bit. Can these technologies coexist with traditional journalistic values like fairness, transparency, and accuracy?

Rebecca: Yes, I think so, but only if human-led. When using AI, humans are crucial to maintaining those journalistic values. AI and humans need to collaborate. Okay, an example. At the beginning of this episode, you made a full disclosure that you were fully scripted by ChatGPT, but I, as the human journalist, decided it was important for you to make that disclosure. I think we humans provide a check on AI. We oversee the work that AI does and make sure it’s transparent, fair, and accurate. We can fact-check. We can make ethical decisions. We can edit to ensure the quality is up to standard, and that’s how we maintain trust and stay accountable to our readers. Just like any team, we each have our strengths and weaknesses. I think AI is great for some things, but other things just need humans. But if we humans step away and let AI do all the work, I think it gets a bit dangerous.

Bob: Let’s talk about accountability. Emilie, if a story is AI-generated but edited by a human journalist, should the journalist still be held responsible, or is it time for the robots to take the heat?

Emilie: You know, Bob, I think it still comes down to journalists and editors being responsible for what they publish. There are press councils, newspapers, and reporter associations all around the world that are creating their own AI guidelines, and so far, most of them share this perspective. When it comes to accountability, journalists and editors are still the ones to be held accountable; just like in pre-AI times, it’s still our duty to verify and double-verify everything that we publish.

Bob: What if, five years from now, AI isn’t just a tool but an actual partner in the newsroom? Farah, what would a newsroom look like with AI fully integrated in a way that respects both ethics and innovation?

Farah: Okay, I have to talk about this Finnish news robot because it’s so innovative. It’s almost like those chatbots you see when you open a website, recommending the newest trends or helping you with your questions. The robot uses machine learning to make personalized recommendations for interesting news content through news alerts and notifications. Ethically, it’s interesting that the people behind this robot designed it to reduce bias. The robot pushes viewers to expand their views on political news by providing two perspectives of the same story. There are many different ways to use AI in the newsroom. However, human oversight is needed to make sure that information is correct.

Bob: Rebecca, let’s wrap it up. What’s one ethical guideline that newsrooms must follow if they want to use AI responsibly?

Rebecca: I think that’s creating and following an ethics policy that’s open to the public. That way, everyone, from the reporters to the editors to the readers, knows how AI is being used in the newsroom and how it’s being managed responsibly. Earlier this year, the Poynter Institute established guidelines for creating a journalism ethics policy. I think every media organization should look at it and implement one. And I think that will guide us as AI continues to change journalism.

Bob: Huge thanks to Emilie, Farah, and Rebecca for bringing all the ethical concerns to the table. Remember, listeners, the future is bright. AI is here to help, and no, you won’t be replaced unless you want to take a beach day. Ha, ha, ha, ha. Catch you next time on “The Future of News,” where we always say, “Embrace the future, because AI is already here.”

[outro music]